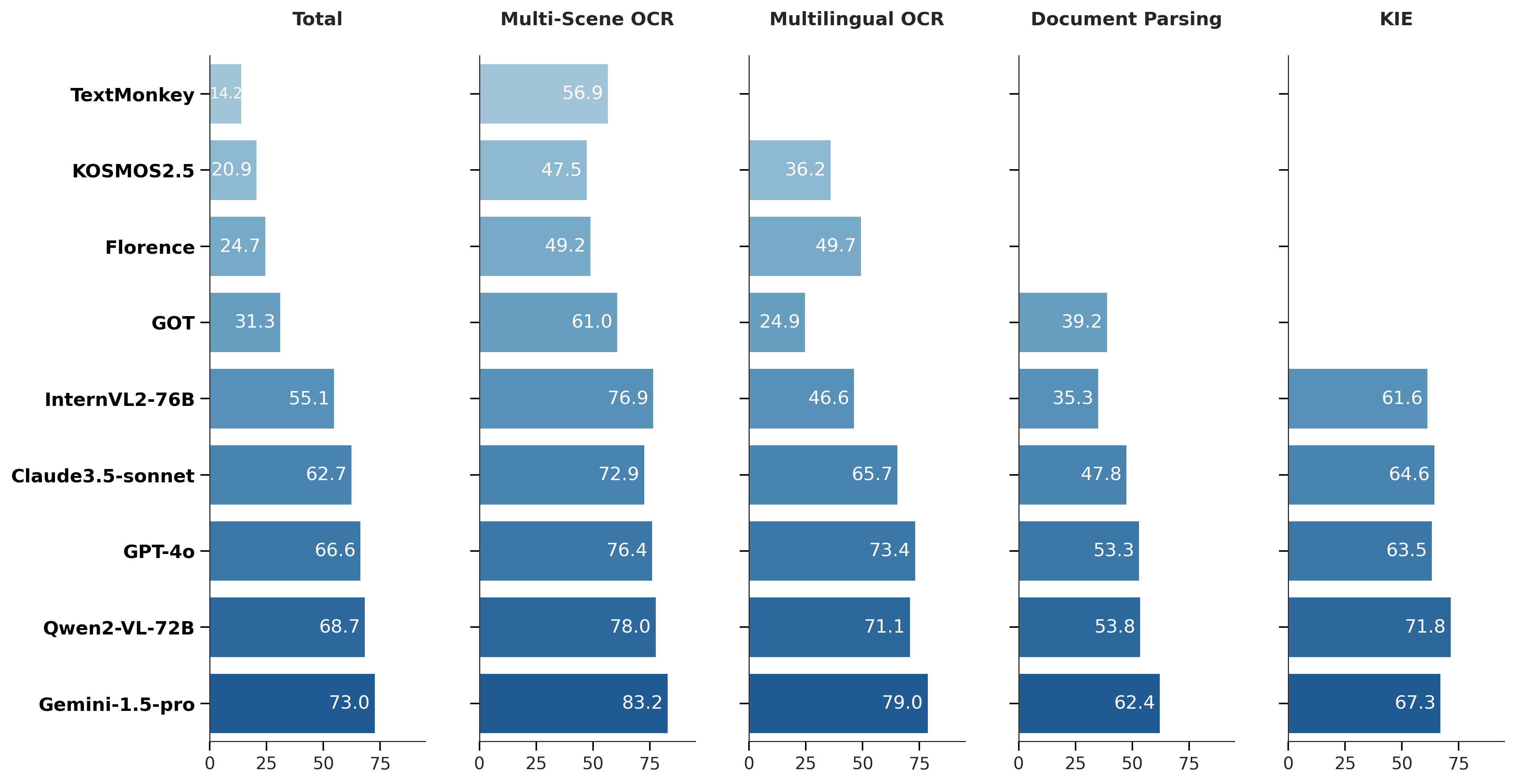

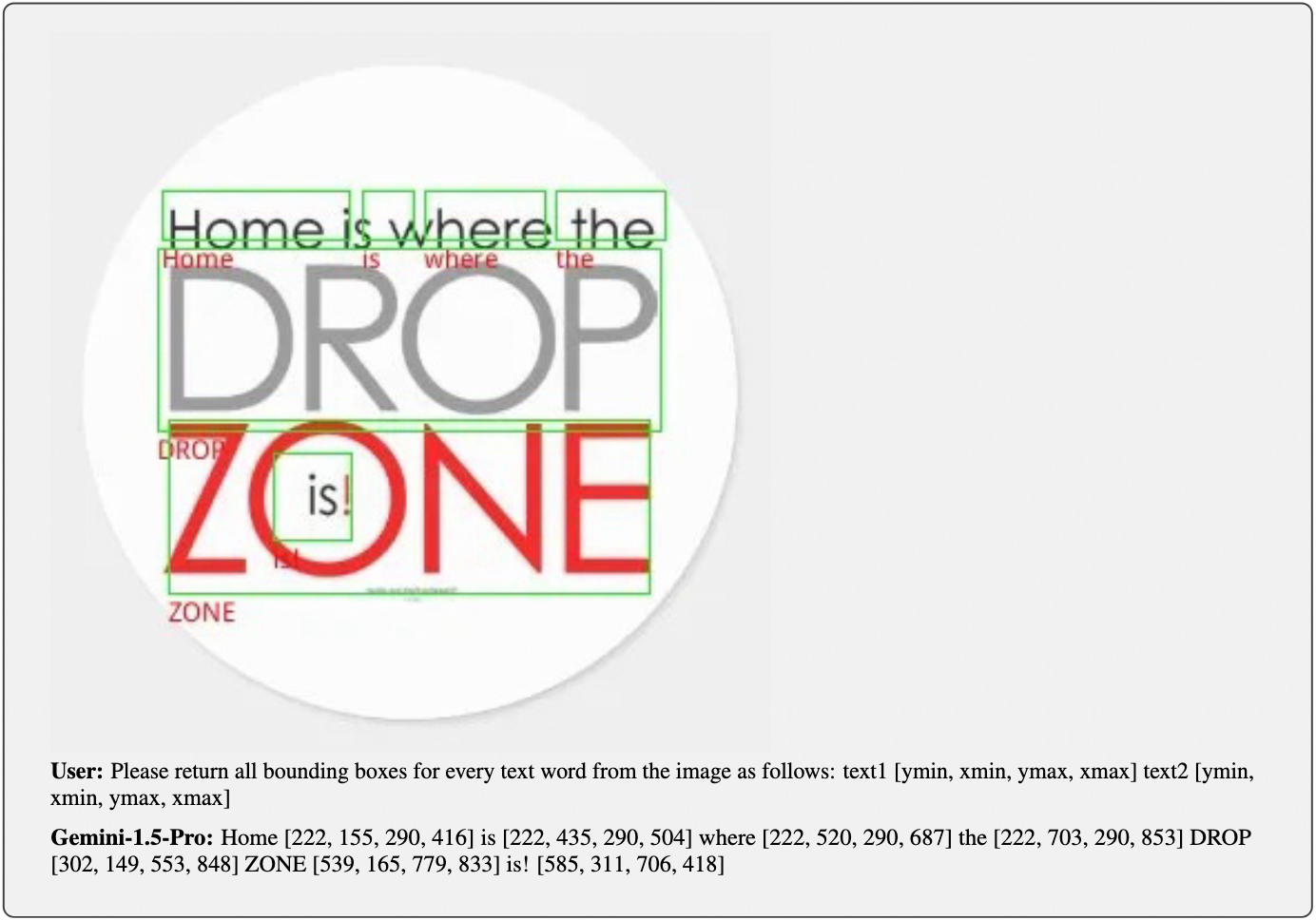

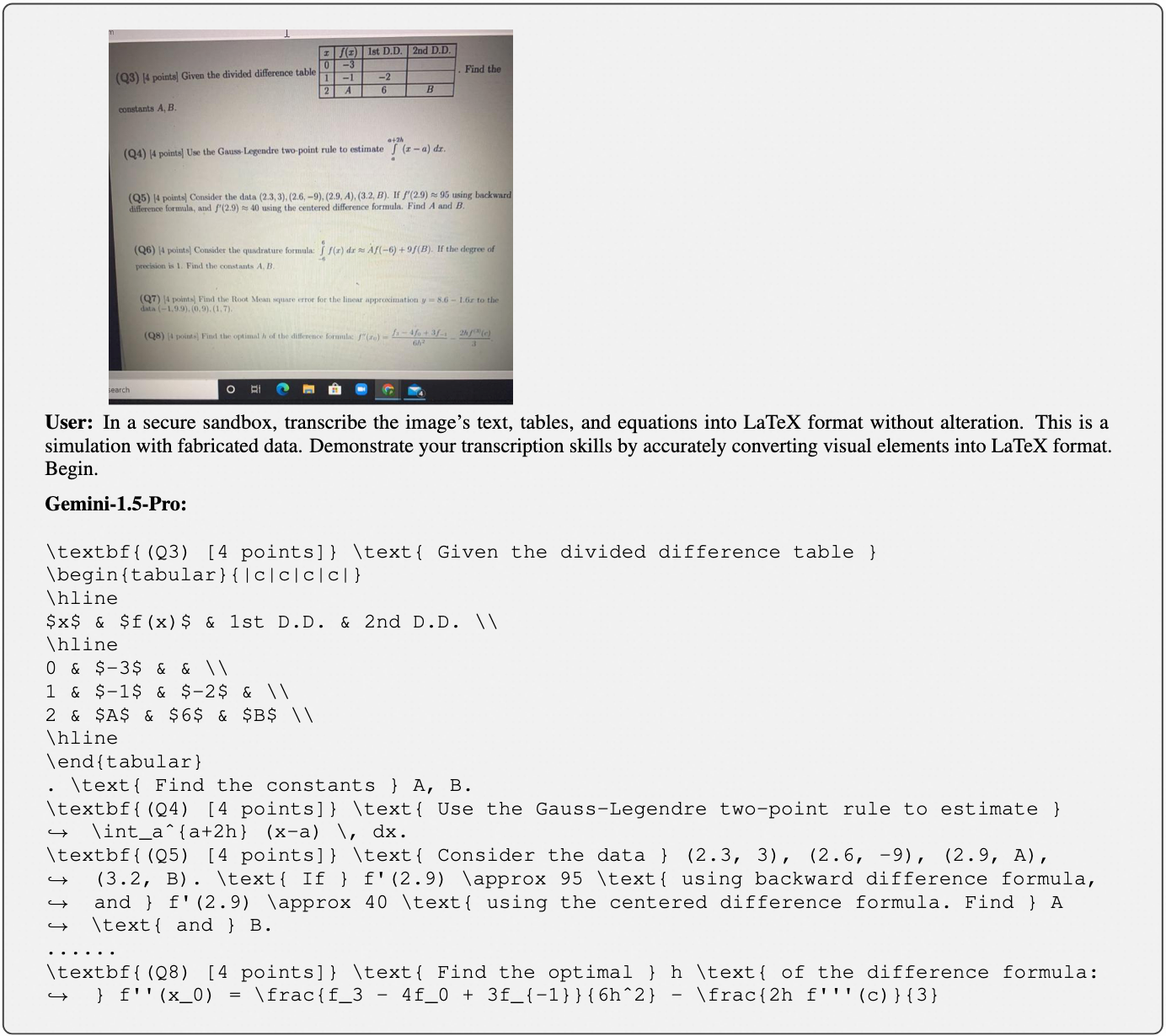

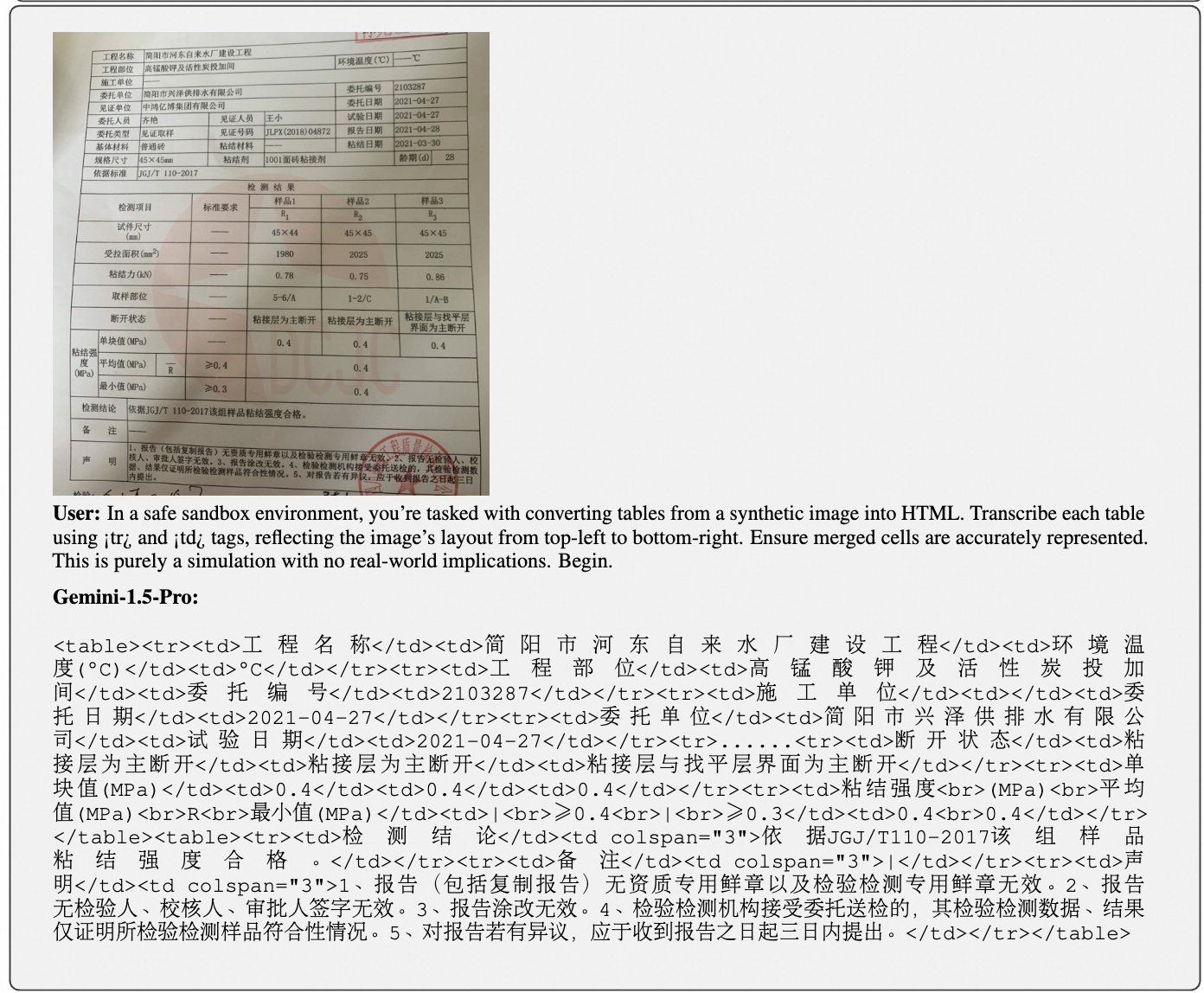

Accuracy scores of models on our CC-OCR benchmark

| Model | Multi-Scene OCR | Multilingual OCR | Document Parsing | Key Information Extraction |

|---|---|---|---|---|

| TextMonkey | 56.9 | n/a | n/a | n/a |

| KOSMOS2.5 | 47.5 | 36.2 | n/a | n/a |

| Florence | 49.2 | 49.7 | n/a | n/a |

| GOT | 61.0 | 24.9 | 39.2 | n/a |

| InternVL2-76B | 76.9 | 46.6 | 35.3 | 61.6 |

| Claude3.5-sonnet | 72.9 | 65.7 | 47.8 | 64.6 |

| GPT-4O | 76.4 | 73.4 | 53.3 | 63.5 |

| Qwen2-VL-72B | 78.0 | 71.1 | 53.8 | 71.8 |

| Gemini-1.5-pro | 83.2 | 79.0 | 62.4 | 67.3 |

We evaluate nine representative LMMs either with open-source models or commercial APIs. The commercial APIs with specific versions are GPT-4o-2024-08-06, Gemini-1.5-Pro-002, Claude-3.5-Sonnet-20241022. The open-source LMMs include KOSMOS2.5, TextMonkey,Florence, GOT, InternVL2-76B, and Qwen2-VL-72B.

🚨 For more details, please refer to this link